Artificial Intelligence (AI) has made remarkable strides in recent years, transforming fields from healthcare to entertainment. One prominent area of AI research is reinforcement learning, a paradigm in which agents learn to make decisions by interacting with their environment. Q-Learning, a popular reinforcement learning algorithm, plays a crucial role in training AI agents, including Non-Player Characters (NPCs), in virtual environments such as Unreal Engine 5. At GenXP we consider Q-Learning central to our AI research, and it is particularly effective when applied to NPCs in Unreal Engine 5. This approach turns an NPC into a full-fledged AI agent capable of interacting with a dynamic environment without needing to know everything about that environment.

Consider our case study, in which a user enters the following prompt:

PROMPT: “Generate a fireman that works at a firestation and can put out fires.”

What processes are needed to accomplish this task in 3D? Set the generative content aside for a moment; that is another topic. Assume the environment is procedurally generated for us and the Metahuman is generated as a typical firefighter. The Metahuman has a skeleton and animations that allow it to move within the environment. What else does it need? It needs the ability to understand, learn from, and interact with the environment. The environment itself provides triggers and geometry to trace against, so the biggest challenge is deciding which model the agent should use to learn. We believe that model is Q-Learning. At the core of the Q-Learning algorithm is the Bellman equation.
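For reference, here is the Bellman optimality equation for action values in standard textbook notation (state $s$, action $a$, reward $r$, next state $s'$, discount factor $\gamma$, learning rate $\alpha$), followed by the Q-Learning update that approximates it from experience. This is the general form of the algorithm, not a description of GenXP's specific implementation:

$$
Q^*(s, a) = \mathbb{E}\left[\, r + \gamma \max_{a'} Q^*(s', a') \;\middle|\; s, a \,\right]
$$

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[\, r + \gamma \max_{a'} Q(s', a') - Q(s, a) \,\right]
$$

The update replaces the expectation with a single observed transition $(s, a, r, s')$, which is why the agent never needs a model of the environment. When $s'$ is terminal, the $\gamma \max_{a'} Q(s', a')$ term is dropped and the target is just $r$.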

Orbit Platform Tracks All Props in the World for Q-Learning

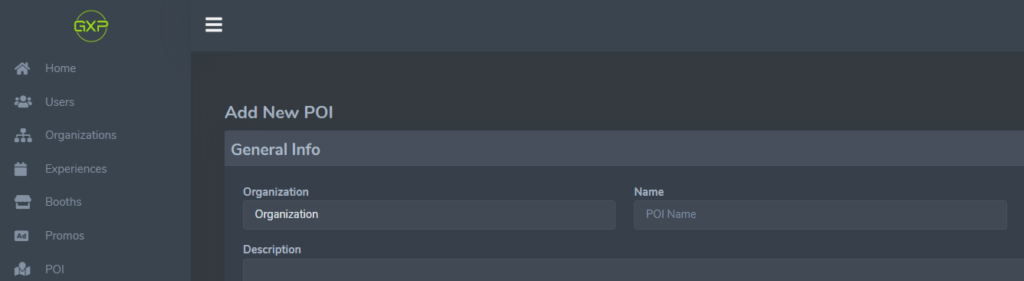

At GenXP we developed a tagging system for all props in Unreal Engine 5, supported by our Orbit platform. Orbit keeps track of every prop in the world, so when the AI needs to learn about a prop, it can simply query Orbit. This gives our AI superpowers over stock UE5, which has no system like Orbit. The screenshot above shows a Point of Interest (POI) item in our Orbit platform. This POI exists and is tracked within Unreal Engine 5. Any POI is dynamic and can be managed within the Unreal Engine environment, either manually by a designer or procedurally by the computer.

When we bring Orbit and Q-Learning together, we get a powerful system in which AI agents learn about the environment they exist in and how to interact with it, without requiring programmers or designers to write a sea of level scripts dictating how the AI interacts with the environment.

In our Firestation example, we can easily imagine the Firetruck, Firehose, and Firehouse all being POIs in the environment. POIs don’t have to be static things in the world. They can also be dynamic things such as other NPCs, drivable cars, animals, or particle effects like fire; basically anything that can move or spawn on its own.
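To make the idea of tagged static and dynamic POIs concrete, here is a minimal, hypothetical sketch of a tag-based POI registry in Python. Orbit's actual data model and API are not described in this post, so every name below (`POI`, `POIRegistry`, `with_tag`, `nearest`) is our own illustration, not Orbit's or Unreal Engine's API. It only shows the kind of query an agent might make, such as "where is the nearest fire?"

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class POI:
    """Hypothetical point of interest: an id, a set of tags, and a world position."""
    poi_id: str
    tags: set[str]
    position: tuple[float, float, float]
    dynamic: bool = False  # True for things that move or spawn (NPCs, cars, fire)


class POIRegistry:
    """Hypothetical in-memory stand-in for a POI tracking service (not Orbit's real API)."""

    def __init__(self) -> None:
        self._pois: dict[str, POI] = {}

    def register(self, poi: POI) -> None:
        self._pois[poi.poi_id] = poi

    def remove(self, poi_id: str) -> None:
        # Dynamic POIs (e.g. a fire that has been put out) can disappear at runtime.
        self._pois.pop(poi_id, None)

    def update_position(self, poi_id: str, position: tuple[float, float, float]) -> None:
        # Moving POIs (NPCs, cars, animals) report new positions as they move.
        self._pois[poi_id].position = position

    def with_tag(self, tag: str) -> list[POI]:
        return [p for p in self._pois.values() if tag in p.tags]

    def nearest(self, tag: str, origin: tuple[float, float, float]) -> POI | None:
        # Straight-line distance only; a real agent would more likely use path cost.
        candidates = self.with_tag(tag)
        if not candidates:
            return None
        return min(candidates, key=lambda p: math.dist(p.position, origin))


registry = POIRegistry()
registry.register(POI("firetruck_01", {"vehicle", "drivable"}, (0.0, 0.0, 0.0)))
registry.register(POI("firehose_01", {"tool", "extinguisher"}, (5.0, 0.0, 0.0)))
registry.register(POI("fire_01", {"fire", "hazard"}, (40.0, 10.0, 0.0), dynamic=True))
registry.register(POI("fire_02", {"fire", "hazard"}, (12.0, 3.0, 0.0), dynamic=True))
print(registry.nearest("fire", (0.0, 0.0, 0.0)).poi_id)  # fire_02
```

Keeping tags as a set lets one prop answer several queries ("vehicle", "drivable"), which is what allows an agent to discover what it can interact with instead of relying on per-level scripts.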

With these items tagged, Q-Learning can be used to train the Fireman to pick up the Firehose, put out the Fire, and then drive the Firetruck back to the Firestation. This can be achieved without scripting the entire process, making it far more practical for anyone to generate a 3D scenario with an AI agent.
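The sketch below reduces the fireman's task to a toy, hypothetical Markov decision process so the learning loop is visible end to end. Everything here is an assumption for illustration: the stage names, the four abstract actions, and the rewards (-1 per step, +10 for getting back to the station). A real UE5 agent would also have to handle navigation, animation, and far richer state. It trains a tabular Q-Learning agent with an epsilon-greedy behavior policy and then reads off the greedy policy.

```python
from __future__ import annotations

import random

# Hypothetical, heavily simplified abstraction of the fireman scenario.
# Stage i can only be advanced by action i; all names and rewards are assumptions.
STAGES = ["needs_hose", "has_hose", "fire_out", "in_truck", "back_at_station"]
ACTIONS = ["pick_up_hose", "spray_water", "enter_truck", "drive_to_station"]
TERMINAL = len(STAGES) - 1


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Return (next_state, reward, done) for the toy task."""
    if action == state:  # the right action for this stage
        next_state = state + 1
        done = next_state == TERMINAL
        return next_state, (10.0 if done else -1.0), done
    return state, -1.0, False  # wrong action: time passes, nothing changes


def train(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
          epsilon: float = 0.2, max_steps: int = 50, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in STAGES]  # Q-table: |S| x |A|
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy behavior policy.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Q-Learning target: bootstrap from the best next action unless terminal.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q: list[list[float]]) -> list[str]:
    """Best-known action name for every non-terminal stage."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda a: q[s][a])] for s in range(TERMINAL)]


q_table = train()
print(greedy_policy(q_table))
# ['pick_up_hose', 'spray_water', 'enter_truck', 'drive_to_station']
```

Nothing in the code states the correct order; the agent recovers it from rewards alone. The -1 step penalty is a common shaping choice that discourages wasted actions.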

While this technology is still in development at GenXP, we felt it was important to begin discussing what we are working on and why. Below we delve deeper into Q-Learning, why it is so important to this process, and how we integrate it with Unreal Engine 5.

Q-Learning in AI Research

Q-Learning is a model-free reinforcement learning algorithm that enables agents to make decisions in an environment so as to maximize cumulative reward. Unlike model-based approaches, Q-Learning does not require an explicit model of the environment’s dynamics (its transition probabilities and reward function), which makes it versatile and applicable to a wide range of scenarios.
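What "model-free" means in practice is that a single update needs only one observed transition: the state, the action taken, the reward received, and the next state. The minimal sketch below shows one tabular update. The function name `q_update`, the dictionary layout, and the example states are our own illustrative choices.

```python
from __future__ import annotations


def q_update(q: dict[str, dict[str, float]], state: str, action: str, reward: float,
             next_state: str, done: bool, alpha: float = 0.5, gamma: float = 0.9) -> float:
    """Apply one tabular Q-Learning update from a single observed transition.

    No transition probabilities or reward model are used: only (s, a, r, s').
    """
    best_next = 0.0 if done else max(q[next_state].values())
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]


q = {
    "near_fire": {"spray": 0.0, "wait": 0.0},
    "fire_out": {"spray": 2.0, "wait": 4.0},
}
# Target = 1.0 + 0.9 * 4.0 = 4.6, so the estimate moves halfway from 0.0 toward it.
print(q_update(q, "near_fire", "spray", reward=1.0, next_state="fire_out", done=False))
```

The printed value is approximately 2.3 (up to floating-point rounding).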

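One key feature of Q-Learning is that it can be applied to complex and dynamic environments. In its classic form, the algorithm maintains a Q-table, a matrix that stores the expected cumulative reward for taking each action in each state. Through an iterative process of exploration and exploitation, the agent refines these estimates and, with them, its decision-making policy. Provided every state-action pair keeps being visited and the learning rate decays appropriately, the estimates converge toward the optimal action values. For very large or continuous state spaces, the table is usually replaced by a function approximator such as a neural network, as in Deep Q-Networks.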
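As an illustration of the Q-table itself, the snippet below stores one row per state and one column per action, then extracts the greedy policy (the highest-valued action in each row) and the state value $V(s) = \max_a Q(s, a)$. The states, actions, and numbers are made up for illustration, not learned by any real agent.

```python
from __future__ import annotations

# Illustrative Q-table for three simplified stages of the fireman task.
# Rows are states, columns are actions; the numbers are made up, not learned.
STATES = ["needs_hose", "has_hose", "fire_out"]
ACTIONS = ["pick_up_hose", "spray_water", "drive_to_station"]
Q = [
    [4.6, 3.1, 3.1],  # needs_hose
    [5.2, 6.2, 5.2],  # has_hose
    [7.2, 7.2, 8.0],  # fire_out
]


def greedy_action(q_table: list[list[float]], s: int) -> int:
    """Index of the highest-valued action in row s (pure exploitation)."""
    row = q_table[s]
    return max(range(len(row)), key=row.__getitem__)


def state_value(q_table: list[list[float]], s: int) -> float:
    """V(s) = max_a Q(s, a): the estimated value of state s under the current table."""
    return max(q_table[s])


policy = {STATES[s]: ACTIONS[greedy_action(Q, s)] for s in range(len(STATES))}
print(policy)
# {'needs_hose': 'pick_up_hose', 'has_hose': 'spray_water', 'fire_out': 'drive_to_station'}
```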

In practice, Q-Learning is paired with an exploration strategy such as ε-greedy, which balances exploration (trying new actions) against exploitation (choosing the best-known action). This balance is essential for agents to adapt to changing conditions and unforeseen challenges, and it makes Q-Learning suitable for training AI agents to navigate complex, dynamic environments, a critical requirement in applications ranging from robotics to video games.
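One common way to implement this balance is ε-greedy action selection with a decaying ε: explore heavily at first, then increasingly exploit what has been learned. The sketch below is a generic version of that technique; the decay rate, the floor of 0.05, and the function names are illustrative assumptions rather than tuned or GenXP-specific values.

```python
from __future__ import annotations

import random


def epsilon_greedy(q_row: list[float], epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon (uniform random action), otherwise exploit (argmax)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=q_row.__getitem__)


def decayed_epsilon(episode: int, start: float = 1.0, end: float = 0.05,
                    decay: float = 0.99) -> float:
    """Exponential decay: explore heavily early on, then settle at a floor of `end`."""
    return max(end, start * decay ** episode)


rng = random.Random(42)
q_row = [1.0, 3.0, 2.0]  # action 1 is currently the best-known action
picks = [epsilon_greedy(q_row, 0.1, rng) for _ in range(10_000)]
# Expected fraction of action 1 is about 0.93: 0.9 from exploiting plus 0.1 * (1/3) from exploring.
print(picks.count(1) / len(picks))
```

Keeping a nonzero floor on ε is a design choice suited to dynamic worlds: if a new fire spawns or a POI moves, the agent still occasionally tries alternatives instead of locking into a stale policy.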

Integration with Unreal Engine 5 for NPC Training

Unreal Engine 5, a state-of-the-art game development platform, provides a rich and immersive environment for training NPCs using Q-Learning. The engine’s advanced graphics, physics, and AI capabilities make it an ideal playground for developing intelligent agents that can interact seamlessly with users.

Metahumans, 3D digital humans created with Unreal Engine’s MetaHuman Creator, add an extra layer of realism to the NPCs. Q-Learning, when applied to these Metahuman-based NPCs, allows for the creation of lifelike characters capable of learning and adapting their behavior based on user interactions. This combination enhances user engagement in gaming and virtual environments, creating a more immersive and dynamic experience.

The integration of Q-Learning with Unreal Engine 5 offers a powerful framework for training NPCs that goes beyond traditional scripted behaviors. The adaptability of Q-Learning aligns well with the dynamic nature of video games, where NPCs need to respond intelligently to player actions and evolving game scenarios.

Q-Learning stands out as a pivotal algorithm in AI research, particularly when applied to the training of NPCs in virtual environments like Unreal Engine 5. The algorithm’s ability to learn from interactions and adapt to dynamic conditions makes it a cornerstone for creating intelligent agents capable of engaging users in a lifelike manner. The synergy between Q-Learning and Unreal Engine 5, with its Metahuman-based NPCs, marks a significant advancement in the field of AI-driven interactive experiences, promising a future where virtual characters can truly understand and respond to user behavior.